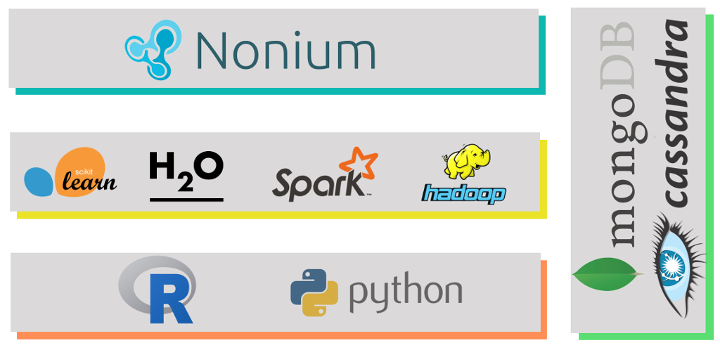

Nonium

Nonium (from the term nonius, a device that improves measurement accuracy by means of an auxiliary scale) is our smart analytics framework, covering the whole predictive modeling cycle. It is structured into smart infocells: blocks that perform tasks within the data analysis process, interact with other infocells, and make decisions both about the features used during modeling and about the process itself.
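The sketch below shows one way infocells could be wired together. It assumes each infocell is a step that reads a shared context and records its decisions there, so later infocells can react to them. `Context`, `Infocell`, `run_pipeline` and the two toy steps are hypothetical names for illustration, not Nonium's actual interface.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class Context:
    """Shared state passed from one infocell to the next (hypothetical structure)."""
    features: List[str]
    decisions: Dict[str, Any] = field(default_factory=dict)


@dataclass
class Infocell:
    """A named step that reads the context and returns it with its decisions added."""
    name: str
    step: Callable[[Context], Context]


def run_pipeline(cells: List[Infocell], ctx: Context) -> Context:
    # Run the cells in order; each one sees the decisions of the previous ones.
    for cell in cells:
        ctx = cell.step(ctx)
    return ctx


def drop_ids(ctx: Context) -> Context:
    # Toy feature decision: discard columns whose name ends in "_id".
    kept = [f for f in ctx.features if not f.endswith("_id")]
    ctx.decisions["dropped"] = [f for f in ctx.features if f not in kept]
    ctx.features = kept
    return ctx


def record_count(ctx: Context) -> Context:
    # Toy process decision based on what earlier cells left in the context.
    ctx.decisions["n_features"] = len(ctx.features)
    return ctx
```

For example, `run_pipeline([Infocell("drop_ids", drop_ids), Infocell("count", record_count)], Context(["age", "user_id", "income"]))` keeps `age` and `income` and records `user_id` as dropped.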

VE

When the feature type is unknown (as in blind datasets), this infocell decides whether each feature is nominal, ordinal or numeric.

It also explores the distribution of each variable, detecting singular values that correspond to errors or to encoded missing values.
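Here is a rough sketch of both tasks, assuming raw values arrive as strings. `infer_type` guesses the type from whether values parse as numbers and how many distinct integer codes appear. `suspicious_values` flags extreme values that repeat unusually often, a typical sign of sentinels such as -999. The function names and the thresholds (`max_ordinal_levels`, `min_share`) are illustrative assumptions, not the rules the infocell actually applies.

```python
from collections import Counter
from typing import List, Optional


def _to_float(value: str) -> Optional[float]:
    # Return the numeric value, or None if the string is not a number.
    try:
        return float(value)
    except ValueError:
        return None


def infer_type(values: List[str], max_ordinal_levels: int = 10) -> str:
    """Heuristic guess of 'nominal', 'ordinal' or 'numeric' (thresholds are assumptions)."""
    parsed = [_to_float(v) for v in values]
    if any(p is None for p in parsed):
        return "nominal"  # at least one non-numeric string
    distinct = set(parsed)
    if len(distinct) <= max_ordinal_levels and all(p.is_integer() for p in distinct):
        return "ordinal"  # few integer codes: plausibly ordered categories
    return "numeric"


def suspicious_values(values: List[float], min_share: float = 0.05) -> List[float]:
    """Flag the minimum or maximum if it repeats and covers at least min_share of rows."""
    counts = Counter(values)
    n = len(values)
    candidates = {min(values), max(values)}
    return sorted(v for v in candidates if counts[v] > 1 and counts[v] / n >= min_share)
```

A low-cardinality counter would also be labeled ordinal by this heuristic. That is exactly the kind of ambiguity that calls for a more careful decision than a fixed rule.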

VR

Variable Representation

This infocell answers questions like:

- What is the minimum number of observations a level of a nominal feature needs in order to be kept in the model (rather than merged into an 'others' level)?

- When is it necessary to reorganize the levels of a nominal feature, and how should this be done?

- When is it better to use a one-hot representation of an ordinal or nominal variable in tree-based models?

In our experience, variable representation can contribute up to a 5-10% average improvement in model performance.
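The first question can be illustrated with a fixed threshold. Levels seen fewer than `min_count` times are merged into `'others'`, and the result is then one-hot encoded. This is a minimal sketch with hypothetical function names. Choosing `min_count`, and deciding whether one-hot encoding helps at all, is precisely what the infocell is meant to automate, so here it is simply set by hand.

```python
from collections import Counter
from typing import List, Tuple


def merge_rare_levels(values: List[str], min_count: int, other: str = "others") -> List[str]:
    """Replace every level seen fewer than min_count times by a single 'others' level."""
    counts = Counter(values)
    return [v if counts[v] >= min_count else other for v in values]


def one_hot(values: List[str]) -> Tuple[List[str], List[List[int]]]:
    """Return the sorted levels and one 0/1 row per value, one column per level."""
    levels = sorted(set(values))
    index = {level: i for i, level in enumerate(levels)}
    rows = []
    for v in values:
        row = [0] * len(levels)
        row[index[v]] = 1
        rows.append(row)
    return levels, rows
```

With `colors = ["red", "red", "blue", "green", "red", "blue"]` and `min_count=2`, the single `"green"` becomes `"others"`. One-hot encoding then produces the columns `["blue", "others", "red"]`.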

NS

Normalization and Scaling

Depending on the model being fitted and the nature of the variables involved, some variables (such as counters, quantities or elapsed times) may need to be transformed to improve model performance.
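The sketch below shows two common transformations. `log1p` compresses the long right tail typical of counters and quantities, and z-score standardization rescales a variable. The function names are hypothetical. Which transformation is needed depends on the model. Distance- and penalty-based models such as SVMs and elastic nets are sensitive to feature scale, whereas tree-based models are unaffected by monotonic transformations of a single feature.

```python
import math
import statistics
from typing import List


def log_counts(values: List[float]) -> List[float]:
    """log(1 + x): compresses the long right tail of non-negative counts and quantities."""
    return [math.log1p(v) for v in values]


def standardize(values: List[float]) -> List[float]:
    """Z-score scaling to zero mean and unit (population) standard deviation."""
    mu = statistics.fmean(values)
    sigma = statistics.pstdev(values)
    if sigma == 0:
        return [0.0] * len(values)  # constant feature: nothing to scale
    return [(v - mu) / sigma for v in values]
```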

FS

Feature Selection

The results we obtain are far better than those of standard approaches to the problem (Recursive Feature Elimination, mRMR, Boruta, Lasso, ...).

We usually achieve double-digit improvements in several metrics (AUC, RMSE, log loss, normalized discounted cumulative gain, mean average precision, ...) on a fresh test set.
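For reference, here is a sketch of normalized discounted cumulative gain (NDCG), one of the ranking metrics listed. It uses the linear-gain variant, in which an item with relevance `rel_i` at position `i` (starting at 1) contributes `rel_i / log2(i + 1)`. Another common variant uses `2^rel_i - 1` as the gain. The document does not say which variant is used, and the function names are illustrative.

```python
import math
from typing import List, Optional


def dcg(relevances: List[float], k: int) -> float:
    """Discounted cumulative gain of the first k items, linear-gain variant."""
    # enumerate starts at 0, so position i = pos + 1 and log2(i + 1) = log2(pos + 2)
    return sum(rel / math.log2(pos + 2) for pos, rel in enumerate(relevances[:k]))


def ndcg(relevances: List[float], k: Optional[int] = None) -> float:
    """DCG of the ranking as given, divided by the DCG of the ideal (sorted) ranking."""
    k = len(relevances) if k is None else k
    ideal = dcg(sorted(relevances, reverse=True), k)
    return dcg(relevances, k) / ideal if ideal > 0 else 0.0
```

For example, `ndcg([0, 1])` is about 0.63, because the only relevant item sits in second place instead of first.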

PT

Parameter Tuning

This step is critical for most existing models, and it interacts with other infocells such as Variable Representation and Feature Selection.
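To make the interaction concrete, here is a minimal sketch of exhaustive grid search. The `score` callable stands in for a cross-validated metric computed with the current feature set and representation. Because the best parameters depend on those choices, a search like this would be rerun whenever Variable Representation or Feature Selection changes them. `grid_search` and the toy objective are hypothetical, and Nonium may use a different search strategy.

```python
import itertools
from typing import Any, Callable, Dict, List, Optional, Tuple


def grid_search(score: Callable[..., float],
                grid: Dict[str, List[Any]]) -> Tuple[Optional[Dict[str, Any]], float]:
    """Try every combination in the grid and return the best one (higher score is better)."""
    names = list(grid)
    best_params, best_score = None, float("-inf")
    for combo in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, combo))
        current = score(**params)
        if current > best_score:
            best_params, best_score = params, current
    return best_params, best_score


def toy_score(depth: int, rate: float) -> float:
    # Toy objective standing in for a cross-validated metric (hypothetical); peaks at depth=4, rate=0.1.
    return -(depth - 4) ** 2 - (rate - 0.1) ** 2
```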

MB

Model Benchmarking

- Nonlinear models:

    - Support vector machine (nonlinear kernel)

    - Random Forest

    - Gradient Boosting (first-order derivatives)

    - Extreme Gradient Boosting (second-order derivatives)

    - Neural Networks

- Linear models:

    - Support vector machine (linear kernel)

    - Elastic net (combined Ridge and Lasso regularization, fitted by coordinate descent)

    - Linear Extreme Gradient Boosting (second-order derivatives)
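The elastic net entry mentions coordinate descent. The sketch below uses the standard cyclic update (the one popularized by glmnet) and assumes centered data with no intercept. It minimizes `(1/2n)||y - Xb||^2 + lam * (alpha * ||b||_1 + (1 - alpha)/2 * ||b||^2)`. Each coefficient is updated in turn as `b_j = S(z_j, lam * alpha) / (mean(x_j^2) + lam * (1 - alpha))`. Here `z_j` is the mean of `x_j` times the partial residual that excludes feature j, and `S` is the soft-thresholding operator. The function names are illustrative; this is not Nonium's implementation.

```python
from typing import List


def soft_threshold(z: float, gamma: float) -> float:
    """S(z, gamma) = sign(z) * max(|z| - gamma, 0)."""
    if z > gamma:
        return z - gamma
    if z < -gamma:
        return z + gamma
    return 0.0


def elastic_net_cd(X: List[List[float]], y: List[float], lam: float,
                   alpha: float, n_iter: int = 100) -> List[float]:
    """Cyclic coordinate descent for the elastic net; assumes centered X and y, no intercept."""
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    residual = list(y)  # y - X @ beta, with beta starting at zero
    for _ in range(n_iter):
        for j in range(p):
            col_sq = sum(X[i][j] ** 2 for i in range(n)) / n
            # z_j = (1/n) * sum_i x_ij * (residual with feature j's contribution added back)
            z = sum(X[i][j] * (residual[i] + X[i][j] * beta[j]) for i in range(n)) / n
            denom = col_sq + lam * (1 - alpha)
            new_bj = soft_threshold(z, lam * alpha) / denom if denom > 0 else 0.0
            # Keep the residual in sync with the updated coefficient.
            delta = new_bj - beta[j]
            for i in range(n):
                residual[i] -= X[i][j] * delta
            beta[j] = new_bj
    return beta
```

With `alpha = 1` the objective reduces to the Lasso, and with `alpha = 0` it reduces to Ridge regression. A large enough `lam` with `alpha > 0` drives every coefficient exactly to zero.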

FI

Feature Importance

Most out-of-the-box procedures for assessing the relative importance of variables are biased and prone to overfitting.
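One widely used way to reduce this optimism is to measure importance on held-out data by permutation. Each feature is shuffled in turn, and the resulting increase in error is recorded. The sketch below does this for any `predict` function. It illustrates the general technique, not the procedure Nonium uses, and the names `permutation_importance` and `mse` are hypothetical. Impurity-based importances computed on training data, by contrast, are known to favor features with many distinct values.

```python
import random
from typing import Callable, List, Sequence


def mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean squared error."""
    return sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / len(y_true)


def permutation_importance(predict: Callable[[List[float]], float],
                           X: List[List[float]], y: List[float],
                           n_repeats: int = 5, seed: int = 0) -> List[float]:
    """Mean increase in held-out MSE when one feature column is shuffled."""
    rng = random.Random(seed)
    base = mse(y, [predict(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild each row with feature j replaced by its shuffled value.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            increases.append(mse(y, [predict(r) for r in X_perm]) - base)
        importances.append(sum(increases) / n_repeats)
    return importances
```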

MD

Marginal Dependence

This is very useful for understanding the relationship between the variables and the response in black-box models.
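Assuming marginal dependence here refers to what is commonly called partial dependence, a minimal sketch follows. For each grid value, the feature of interest is fixed at that value in every row, and the model's predictions are averaged. `partial_dependence` is a hypothetical name, and `predict` can be any black-box model. When features are strongly correlated, this averaging can evaluate the model on unrealistic combinations, so the curve should be read with care.

```python
from typing import Callable, List, Sequence


def partial_dependence(predict: Callable[[List[float]], float],
                       X: List[List[float]], feature: int,
                       grid: Sequence[float]) -> List[float]:
    """For each grid value, fix `feature` at that value in every row and average the predictions."""
    curve = []
    for value in grid:
        preds = [predict(row[:feature] + [value] + row[feature + 1:]) for row in X]
        curve.append(sum(preds) / len(preds))
    return curve
```

For a model `2 * x0 + x1`, the partial dependence curve on `x0` is a straight line with slope 2, shifted by the mean of `x1`.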

ME

Model Ensembling

This provides a gold-standard model against which the relative performance of each individual model can be compared.
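The simplest form of ensembling is a weighted average of the models' predictions, sketched below. `average_ensemble` is a hypothetical helper, and equal default weights are an assumption. Stacking and weights fitted on validation data are common alternatives. Each model's held-out score can then be compared with the ensemble's.

```python
from typing import List, Optional, Sequence


def average_ensemble(predictions: Sequence[Sequence[float]],
                     weights: Optional[Sequence[float]] = None) -> List[float]:
    """Weighted average of per-model prediction vectors (equal weights by default)."""
    if weights is None:
        weights = [1.0] * len(predictions)
    total = sum(weights)
    n_rows = len(predictions[0])
    return [sum(w * p[i] for w, p in zip(weights, predictions)) / total
            for i in range(n_rows)]
```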

ML

Meta Learning

We currently use a rule-based system to select initial values, options and parameter ranges for each model. This will change in the near future:

We are currently working on a meta-learning model that uses topological descriptors of the dataset to select the most suitable model family, the parameter ranges in which the model will be optimal, and the variable transformations that will perform best. This will make it possible to obtain an optimal solution without computing a huge number of models.
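A common meta-learning baseline is nearest-neighbor lookup, shown here only as an illustration. Each dataset is described by a few meta-features, and the best model family found on the most similar past dataset is reused. The sketch uses simple, hypothetical descriptors (log row count, log column count, share of nominal features) in place of the topological descriptors mentioned above, which are not specified. `describe`, `recommend` and the model family labels are illustrative.

```python
import math
from typing import List, Tuple

MetaFeatures = Tuple[float, float, float]


def describe(n_rows: int, n_cols: int, n_nominal: int) -> MetaFeatures:
    """Hypothetical descriptors: log10 rows, log10 columns, share of nominal features."""
    return (math.log10(n_rows), math.log10(n_cols), n_nominal / n_cols)


def recommend(history: List[Tuple[MetaFeatures, str]], query: MetaFeatures) -> str:
    """Return the model family that worked best on the most similar past dataset."""
    _, family = min(history, key=lambda item: math.dist(item[0], query))
    return family
```

In practice the descriptors would be scaled so that no single one dominates the Euclidean distance.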